Augmented Reality Overview

Tuesday, June 23, 2009 at 7:34PM

Many of the links in this article are for video demos. Rather than having a string of 30+ videos cluttering and breaking up the article, I’ve chosen to set up a separate video page. When you click a video link, it will open a second window. You can view the related video as well as navigate all of the other videos from this window. If your monitor is large enough to permit, I would even suggest leaving the second window open for the videos to cue each video when needed, as you read through the article. To differentiate the video links from other links, links to videos are each followed by a “¤”. To open the window now, click here ¤.

While social media in general, and Facebook and Twitter specifically, have been monopolizing mainstream media’s coverage of online trends, augmented reality is getting a lot of inside-the-industry exposure, mostly for its undeniable wow factor. But that wow factor is a double-edged sword, and advertising has a way of turning trends into fads just before moving on to the next brand-new thing. So for this article I wish to focus on practical applications of augmented reality with clear end-user benefits. I’ve deliberately chosen not to address entertainment and gaming related executions, as they are beyond the scope of this article and frankly merit dedicated attention all their own. Perhaps I’ll do just that in a future article.

Can it Save the Car?

The automotive industry was an early adopter. Because of the manufacturing process, the CAD models already exist, and the technology is well suited to showing off an automobile from a god’s-eye view. Mini ¤ may have been first off the pole position, with Toyota ¤, Nissan ¤ and BMW ¤ tailgating close behind. Some implementation of AR will soon replace (or augment) the “car customizer” feature that is standard in some form on all automobile websites.

The kind of augmentation that is so applicable to automotive is also readily adaptable to many other forms of retail. Lego ¤ is experimenting with in-store kiosks that display the assembled kit when the respective box is held before the camera. Because Lego sets are “kits,” the technology is very applicable in-store; however, I find the eCommerce opportunities much more compelling. Ray-Ban ¤ has developed a “virtual mirror” that lets you try on virtual sunglasses from their website. Holition ¤ is marketing a similar implementation for jewelry and watches. HairArt is a virtual hairstyle simulator developed for FHI Heat ¤, maker of hair-care products and hairstyling tools. While demonstrating potential, some attempts are less successful ¤ than others (edit: I just learned of a better execution of an AR Dressing Room by Fraunhofer Institut). One of the most practical, useful implementations I’ve seen is for the US Post Office ¤: a flat rate shipping box simulator (best seen). These kinds of demonstration and customization applications will soon be pervasive in the eCommerce space and in retail environments. TOK&STOK ¤, a major Brazilian furniture retailer, is using in-store kiosks to view furniture arrangements, though I personally find theirs to be a poor implementation. A better method would be to use the same symbol tags to place the AR objects right into your home, using the camera connected to your PC. And that’s just what one student creative team has proposed as an IKEA ¤ entry for their Future Lions submission at this year’s Cannes Lions Advertising Festival. A quite sophisticated version of this same concept has also been developed by Seac02 ¤ of Italy.

To Tag, or not to Tag?

A couple of years ago I wrote here about QR codes. A couple of weeks ago, while attending the Creativity and Technology Expo, I was given a private demo of Nokia’s Point & Find ¤. This is basically the same technology as QR codes, but uses more advanced image recognition that doesn’t require the code. Candidly, I wasn’t terribly impressed. The interface is poor, and the implementation is so focused on selling to advertisers that its makers seem oblivious to how people will actually want to use it, straitjacketing what could be a cool technology. Hopefully future versions will improve. Most implementations of augmented reality rely on one of two techniques: either a high degree of place-awareness, or some form of object recognition. Symbols similar to QR codes are most often used when the device is not place-aware, though some, like Nokia’s Point & Find, don’t require a symbol tag. Personally, even if the technology no longer requires it, I feel the symbol or tag-code is a better implementation when used for marketing. We are still far from a point where everything is tagged, so people won’t know to inspect an object if no tag-code is present. Furthermore, the codes placed around on posters and printed material help build awareness for the technology itself. Everything covered here thus far has been recognition-based augmented reality.

Through the Looking Glass

Location-aware augmented reality usually refers to some form of navigational tool. This is particularly noteworthy with new applications coming to market for smartphones. As BlackBerry hits back at the iPhone, Android’s list of licensees grows and Palm brings a genuine contender back to the table with the new Palm Pre, there is huge momentum in the smartphone market that not even the recession can slow down. I personally think the name “smartphone” is misleading, as these devices are far beyond being a mere ‘phone’. Even a very smart one. They are full-on computers that, among many other features, happen to include a phone. In my prior article on augmented reality I focused on the iPhone’s addition of a magnetometer (digital compass). This gave the iPhone the final piece of spatial self-awareness needed to develop AR applications like those coming fast and furious to the Android platform. Think of it like this: the GPS makes the phone aware of its own longitude and latitude on the earth, the compass tells it which direction it is facing, and the accelerometer (digital level-meter) measures how far the phone is tilted relative to the ground (this is what lets the phone’s browser know whether to be in portrait or landscape mode). Through this combination of measures the device can determine precisely where in the world it is looking. There is already a fierce race to market in this highly competitive space. Applications like Mobilizy’s Wikitude ¤ (Android) and Layar ¤ (Android), and proofs of concept seeking funding like Enkin ¤ (Android) and SekaiCamera ¤ (iPhone), are jockeying for the mindshare of early adopters. Others have developed proprietary AR navigational apps, such as IBM’s Seer ¤ (Android) for the 2009 Wimbledon games.
Two months ago, when Nine Inch Nails released their NIN Access ¤ iPhone app, there was no iPhone on the market with a built-in compass, so the capability for this level of augmentation was not yet available. But a look at the application’s “nearby” feature gives a hint at the kind of utility and community that could be built around a band or a brand using this kind of AR. View a demo of Loopt ¤, and only a little imagination is needed to see how social networking can be enhanced by place awareness. Now add person-specific augmentation tied to a profile, and the creepy stalker potential is brought to full fruition, depending on your perspective. And there are other well-established players in the automotive navigation space that have a high potential for crossover. The addition of a compass to the iPhone paved the way for an app version of TomTom ¤. Not to be outdone, a Navigon ¤ press release has announced that they too have an iPhone app in development. How long before location-aware automotive navigation developers choose to enter the pedestrian navigation space?
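
For the technically curious, here is a rough sketch of how the GPS, compass and accelerometer readings described above might be combined to decide whether a point of interest is in view. It is only an illustration under stated assumptions, not how Wikitude, Layar or any other app mentioned here actually works. The function names, the 60-degree field of view, the 480-pixel screen width, and the accelerometer convention (reading roughly (0, 0, +g) when the phone lies flat, face up) are all hypothetical choices made for this example.

```python
import math


def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing from point 1 to point 2,
    in degrees clockwise from true north (0 <= result < 360)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlon))
    return math.degrees(math.atan2(y, x)) % 360.0


def relative_angle_deg(heading, bearing):
    """Signed angle from the compass heading to the target bearing,
    wrapped into the range [-180, 180). Positive means 'to the right'."""
    return (bearing - heading + 180.0) % 360.0 - 180.0


def screen_x(heading, bearing, fov_deg=60.0, width_px=480):
    """Horizontal pixel position for a target, or None if it lies
    outside the camera's field of view. Uses a simple linear mapping
    of angle to pixels, which is an approximation."""
    offset = relative_angle_deg(heading, bearing)
    if abs(offset) > fov_deg / 2.0:
        return None
    return round((offset / fov_deg + 0.5) * width_px)


def tilt_from_flat_deg(ax, ay, az):
    """Angle between the device's z axis and the measured acceleration
    vector: about 0 when lying flat face up, about 90 when held upright
    (assuming the sign convention stated in the text above)."""
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    if norm == 0.0:
        raise ValueError("accelerometer reading is all zeros")
    cos_angle = max(-1.0, min(1.0, az / norm))
    return math.degrees(math.acos(cos_angle))
```

An app along these lines would compute `bearing_deg` from the phone's GPS fix to each nearby landmark, pass it with the compass heading to `screen_x` to decide where (or whether) to draw a label, and use the tilt angle to decide how high on the screen to place it.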

Some Assembly Required

It seems everyone wants some AR business from IKEA. Another spec project, by a student at the University of Singapore, proposes an assembly instruction manual for IKEA ¤ furniture. In a more sophisticated application along the same line of thought, BMW ¤ is experimenting with augmented reality automotive maintenance and repair technology. Note in that video that the mechanic is not doing this in front of his laptop camera, nor is he holding his smartphone in front of his face. He’s wearing special AR eyewear. The potential for hands-free instruction and tutorial is as obvious as it is unlimited. Consider any product you purchase that comes with instructions (You do read the instructions, right?). A municipal construction crew repairing a broken water pipe could effectively have X-ray vision, seeing where all the pipes are under the road, based on schematics supplied to their eyewear from city records.

Seeing is Believing

When it comes to Virtual Reality, I’ve had a mantra that none of this will really take off until we’re in there versus looking at there. I believe augmented reality will be the catalyst that pushes digital eyewear into the marketplace. Virtual World applications are, by their nature, not location dependent. In many ways that’s the point: you can be anywhere. And sitting at your computer or game console and looking at a screen is a well-established all-purpose interface. Place-aware augmented reality, on the other hand, is location dependent, and walking down the street holding your smartphone in front of your face is not a long-term solution. In only a short couple of years, a Bluetooth earpiece ¤ has gone from marking you as the goofy guy walking down the street who looks like he’s talking to himself, to a common everyday accessory, even a fashionable one. What works for your ears is now coming to your eyes: a hands-free visual interface in the form of eyewear. Some variation of this concept has been around for a long time ¤. Even most contemporary models are less fashionable than Geordi La Forge’s visor, but slowly they are improving.

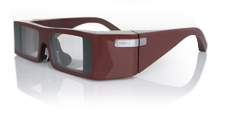

The Vuzix Wrap 920AV (at left), whose prototype premiered at the 2009 CES in Las Vegas, are the newest consumer-class digital eyewear marketed for augmented reality applications. WIRED Magazine’s Gadget Lab feels their most significant feature “comes from the fact that the company finally hired a designer aware of current aesthetic tastes.” What is significant about the 920AVs is that: A. They boast ‘see-thru’ video lenses that readily lend themselves to augmented reality applications, and B. They are stereoscopic (meaning they have a separate video channel for each eye, required for 3D). They are meant to hit the market in the Fall, and are being pushed as an iPhone compatible device. If Vuzix is smart, they will do a bundled play with a “killer app” such as SekaiCamera or a similar product. The glasses have the potential to be the ‘must have’ gift for the 2009 holiday season. Not to oversell them: I have not personally demoed them yet, so I don’t know if they will deliver on the hype, but they look as though they will be first to market, and they will be the leading contender in the immediate future. Here is a demonstration of a prior Vuzix model ¤ (behold the fashion statement). Using symbol-tag based augmented reality, this man places a yacht in his living room.

If the quality of the user experience fails to live up to expectations, Vuzix has many pretenders to the crown. Fast followers like Lumus (at left) and others are trying to get products to market as well. Then there are MyVu, Carl Zeiss, i-O Display Systems and others who have video eyewear products and are likely candidates to come forward with AR offerings. Add to that a technical patent awarded to Apple last year for an AR eyewear solution of their own and it is clear this could quickly become a crowded and competitive product category. This video titled Future of Education ¤, while speculative, is a splendidly produced and rather accurate projection of where the technology is going.

Where to From Here?

We’re moving in this direction at exponential speed, and the pace of progress is only going to keep accelerating. As we see the convergence of augmented reality with mobile, and of mobile with ear and eyewear, there is another set of convergences just over the horizon. We’re on the threshold of realtime language translation ¤. This is an ingredient technology and, like a spell-checker, will soon be baked into all communications devices, the first of which will be our phones. The Nintendo Wii brought motion capture into our homes, and technologies like Microsoft’s Project Natal ¤ are converging motion capture with three-dimensional optical recognition, so no device is needed. And everything, both real and virtual, will soon be integrated into the semantic web. Intelligent agents will assist us with many tasks. While most of this intelligence will occur behind the curtain, as humans we like to personify our technology. It won’t be long before our personal digital assistant could be given the human touch. How human?

NOTE: In the references below, I’ve included a list of firms that have created some of the pieces shown here or the technologies used.

AR, Augmented Reality, eyewear, smartphone in Technology, transhumanism